Richard Rorty’s William E. Massey Sr. Lectures in the History of American Civilization have been rediscovered, almost two decades after they were delivered, in light of the election of Donald Trump as the 45th President of the United States. And with good reason. To those of us who watched the 2016 election night results materialize in utter stupefaction and horror, Rorty would contend that the Left has been flippantly ignoring the potential for a Trump presidency since the collapse of the leftist reform movement in the mid-1960s. This will seem counter-intuitive to many, as the 1960s are now ingrained in the cultural consciousness as the apotheosis of the American Left. Rorty, however, draws a distinction between earlier 20th century iterations of leftist activism (which were bonded with workers’ unions and won political victories that furthered social progress and fair standards of labor) and the cultural politics that became the driving force of the Counter-Culture Revolution, and which dominates leftist discourse to this day. The crucial distinction, in Rorty’s words, is between “agents and spectators.”

In the first lecture, “American National Pride: Whitman and Dewey,” Rorty uses those two eminent American thinkers as counterweights to the pervading modern influence of fatalist Europeans such as Foucault, Heidegger, Lacan, and Derrida. What Rorty sees as a source of inspiration in Whitman and Dewey is their ability to reconcile the potential for American national pride with their secular liberal humanism. Rorty makes what I found to be a provocative argument: that leftists who view the most shameful events of American history, such as the American Indian genocide or the killing of over a million Vietnamese, as irrevocable stains on the United States, events so grave that atonement is impossible, fall into the trap of a metaphysical sinner complex better suited to the followers of St. Augustine. Dewey, by contrast, “wanted Americans to share a civic religion that substituted utopian strivings for claims to theological knowledge” (38). Instead, on the wings of Foucault and Lacan, American leftists have become apocalyptic martyrs and the rationalizers of hopelessness.

The second lecture, “The Eclipse of the Reformist Left,” is a retelling through Rorty’s eyes of how the cultural Left, fueled by university unrest, usurped the reformist Left in the mid-60s. He opens with a bold proclamation: Marxism at the end of the 20th century is as morally bankrupt as Roman Catholicism at the end of the 17th century. Rorty proudly proclaims himself an anti-Marxist, and levels language as strong as any right-winger’s against Stalin’s “evil empire” and its insidious global influence. He offers a defense of the Congress for Cultural Freedom (of which his father was a member), which the New York Times exposed as a CIA outfit in 1966. The CCF’s endowment is still a point of contention for cultural leftists who were molded intellectually in the mid-20th century (I most recently came across an ominous allusion in a review of Matthew Spender’s 2015 memoir, in reference to his father the poet Stephen Spender, editor of the CCF-funded literary magazine Encounter). Rorty agrees with Todd Gitlin that the watershed moment for the splintering of the Left occurred in August 1964, when the Mississippi Freedom Democratic Party was denied seats at the DNC in Atlantic City, and Congress passed the Tonkin Gulf Resolution. Young leftists were left with an impression of their country as inherently stained, corrupt, and irreparable, thus bringing an end to the leftist reformism that defined the so-called Progressive Era. In place of reforms, the New Left called for revolution. This type of thinking is perhaps best illustrated in Rorty’s critique of Christopher Lasch’s 1969 polemic, The Agony of the American Left, a book which “made it easy to stop thinking of oneself as a member of a community, as a citizen with civil responsibilities. For if you turn out to be living in an evil empire (rather than, as you had been told, a democracy fighting an evil empire), then you have no responsibility to your country, you are accountable only to humanity” (66).

Readers who are most curious about Rorty’s anticipation of Trump should skip to the third and final lecture, “A Cultural Left.” This lecture will be a bitter pill for many young academics to swallow, ensconced as they are in the rhetoric of identity politics (I write this as a recent humanities Ph.D. dropout). Rorty’s diagnosis of the current malaise requires something the cultural Left deplores: nuance. While he praises the advances the New Left has made in curbing the dominance of stigma and sadism (racism, sexism, homophobia, etc.) in American culture, he draws attention to the glaring fact that, while we have made much headway in social equality since the 1960s, economic inequality has increased in tandem. It’s as if the Left lacks the focus to pursue two initiatives at once. While recent achievements in socio-cultural progress have been nothing short of heroic, by taking our eyes off the fight for socialist economic reforms, we were effectively asleep at the wheel in the 1980s as the Reaganite neo-liberals waged their campaign of annihilation on the meager welfare state accomplished by the reformist Left. By the end of the century, we had also lost the minds of the voters, and the only Democrats who stood a chance in a national election were the advocates of lukewarm centrism like Bill Clinton.

So, the reader must ask, if the Left has proven historically unable to pursue multiple objectives simultaneously, then which is more important: cultural or economic reforms? Rorty, while not dismissing the fight against all forms of cultural prejudice, warns that a globalized economy run by an entrepreneurial elite is far more likely to upend the American political system and augur an authoritarian future. For, as he rightly points out, the Progressive Era champions of socialist economic reforms (labor union members, farmers, unskilled workers) undoubtedly included many racists, sexists, and homophobes. However, by uniting those groups under the banner of shared economic interests, leftists were able to achieve upward social mobility for all Americans. By contrast, in the absence of a strong, politicized movement for workers’ rights and fair wages, the white working class, prone as we all are to tribalism, will inevitably fall under the spell of populism. Enter Trump:

…members of labor unions, and unorganized unskilled workers, will sooner or later realize that their government is not even trying to prevent wages from sinking or to prevent jobs from being exported. Around the same time, they will realize that suburban white-collar workers—themselves desperately afraid of being downsized—are not going to let themselves be taxed to provide social benefits for anyone else.

At that point, something will crack. The nonsuburban electorate will decide that the system has failed and start looking around for a strongman to vote for—someone willing to assure them that, once he is elected, the smug bureaucrats, tricky lawyers, overpaid bond salesmen, and postmodernist professors will no longer be calling the shots. A scenario like that of Sinclair Lewis’ novel It Can’t Happen Here may then be played out. For once such a strongman takes office, nobody can predict what will happen. In 1932, most of the predictions made about what would happen if Hindenburg named Hitler chancellor were wildly optimistic. (90)

The election of Donald Trump is a travesty, though one we should have seen coming. My initial reaction (disgust, hopelessness, defeat) is emblematic of the dangerous sense of resignation that made it possible for the far right to consume every branch of the American government in the first place. Those of us on the Left now have two options: give in to our anguish and become spectators to the dismantling of a century’s worth of progress, or once again become agents for change. We should never forget the more sinister chapters of America’s past, but in recognizing the potential for even darker days ahead, our energy is best spent not in doleful reflection, but in working to achieve a country worthier of our pride. As Rorty writes, “we should not let the abstractly described best be the enemy of the better” (105).

Perhaps mercifully, Richard Rorty did not live to see the events he predicted transpire. He finished his career at Stanford University, and died in 2007 at the age of 75.

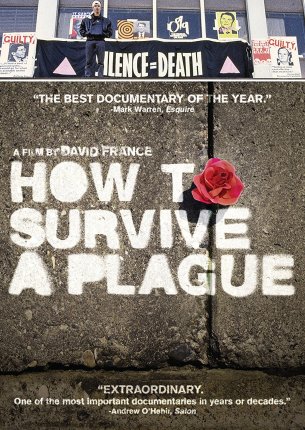

In November 2016, Knopf published David France’s How to Survive a Plague: The Inside Story of How Citizens and Science Tamed AIDS. While I certainly intend to read the book, my nightstand is currently overflowing with volumes. In the meantime, I opted to watch France’s documentary by the same title, which preceded the book by four years and inspired its publication.

Timothy Snyder’s history of the Holocaust is situated within the framework of his academic speciality: (post)modern Eastern Europe. Snyder’s previous work of popular history, Bloodlands: Europe Between Hitler and Stalin (2013), focused on the hellish reality of Eastern European countries caught between two mass-murdering empires in WWII. Black Earth can be read as an extension of that project, only from the perspective of the 5.5 million Jews murdered by Germany in the stateless zones of Eastern Europe. Perhaps unavoidably when one considers the tangled history of the states in question, the reader risks getting lost in the minutiae of rival political parties, internal schisms, disputed borders, and ancient ethnic prejudices. Yet despite the expository density, the central tenets of the book are repeated frequently and clearly enough to be condensed easily.
